The Art of Asking Questions Meets the Science of Reliable Answers

Crafting insightful questions is an art. But ensuring the data you get is reliable? That’s pure science. Reliable insights depend on consistent responses: when you ask the same question of different but equivalent samples, the answers should align. If they don’t, that’s a red flag pointing to a deeper problem with your data source.

Unfortunately, data reliability in the insights industry is under siege. While fraudsters, click farms, and disengaged respondents have long been a concern, the rise of AI-driven bots has created an unprecedented crisis. These bots infiltrate sample exchanges, wreaking havoc on data integrity. The result? Insights that are far from reliable.

The good news is that you can take steps to protect your research. Start by asking your sample provider these essential questions:

- Where do the respondents in your sample come from?

- What evidence supports the reliability of your data?

- How do you detect and eliminate AI-driven bots and other bad actors?

Understanding Respondent Sources: Why It Matters

The origin of your sample is critical to data quality. If your respondents aren’t the right fit, your insights won’t be accurate. For example:

- Loyalty Program Bias: Is the sample sourced from loyalty card members, who might have shopping habits skewed by their program affiliations?

- Sample Exchange Risks: Are respondents randomly redirected from other surveys they didn’t qualify for?

- AI-Driven Fraud: Are bots completing your surveys just to claim incentives?

At Angus Reid Group (ARG), we mitigate these risks through careful recruitment. We attract respondents via targeted advertising for the Angus Reid Forum, rigorously profile them, and require participation in engagement surveys before they’re accepted into our community. This process ensures a high standard of respondent quality, making our data reliable and actionable.

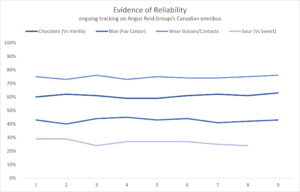

Evidence of Reliability: Proving Data Consistency

Before trusting a sample provider, demand evidence of reliability. Can they demonstrate that consistent questions yield consistent answers across different samples? This is a hallmark of dependable data.

At ARG, we continuously test the reliability of our data through our omnibus surveys. These surveys periodically re-ask a set of simple questions, which lets us measure consistency across independent samples over time. Our results show minimal variation, well within the expected range for nationally representative samples of 1,500 adult Canadians.

The takeaway? Boringly straight lines on a graph signal boringly reliable data. And in research, reliability is never boring—it’s essential.
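To make “within the expected range” concrete, here is a minimal Python sketch. It assumes simple random sampling, which opt-in panels only approximate, and it ignores weighting effects. It computes the conventional margin of error for a sample of 1,500 and uses a pooled two-proportion test to check whether the gap between two waves of the same question falls within sampling noise. The function names are illustrative; this is not ARG's published methodology.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Margin of error for a proportion (default z = 1.96, i.e. 95% confidence).

    Assumes simple random sampling; p = 0.5 gives the widest, most conservative margin.
    """
    return z * math.sqrt(p * (1 - p) / n)


def within_sampling_noise(p1: float, n1: int, p2: float, n2: int, z: float = 1.96) -> bool:
    """Pooled two-proportion z-test: is the wave-to-wave gap within sampling noise?"""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return abs(p1 - p2) <= z * se


print(f"±{margin_of_error(1500) * 100:.1f} points")   # ±2.5 points
print(within_sampling_noise(0.42, 1500, 0.44, 1500))  # True
print(within_sampling_noise(0.42, 1500, 0.50, 1500))  # False
```

For n = 1,500 the margin works out to roughly ±2.5 percentage points. A two-point shift between waves is therefore unremarkable, while an eight-point jump on a question that should be stable would be worth investigating.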

Combatting AI-Driven Threats: Protecting Data Quality

AI-driven bots have introduced a new layer of complexity to sample integrity. Addressing this requires robust detection and prevention methods. Ask your provider:

- How do you identify bots, speeders, and straight-liners?

- What safeguards are in place to ensure responses come from real, engaged individuals?

At ARG, we’ve developed proprietary fraud detection methods combining AI, algorithms, and carefully designed question types. These tools help us identify and eliminate bots and bad actors, ensuring the reliability of our data.

Ask any potential supplier to provide similar evidence of reliability. If they can’t, ask yourself why not.

As the old proverb says: “Trust, but verify.”

Battling Bots: Protecting Your Research from AI-Driven Threats

In the ever-evolving world of research, ensuring reliable data has become more challenging than ever. While speeders—respondents who rush through surveys—and straight-liners—those who answer with repetitive patterns—are relatively easy to spot, AI-driven bots present a whole new level of complexity. These bots mimic human behavior, making them much harder to identify.

To combat this, you need to ask your sample provider the right questions. Moving from traditional detection methods to advanced, AI-focused tools is essential to staying ahead of the game.
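Speeders and straight-liners, the easier cases described above, can usually be caught with simple rules. The sketch below is a hypothetical illustration, not ARG's method. It flags a speeder when completion time falls below a fraction of the median (50% here, an assumed cut-off that would be tuned per survey) and a straight-liner when nearly every item in a rating grid gets the same answer (90% here, also an assumption).

```python
from collections import Counter
from statistics import median


def flag_speeders(durations_sec: dict[str, float], fraction: float = 0.5) -> set[str]:
    """Return IDs of respondents who finished faster than `fraction` x the median time."""
    cutoff = fraction * median(durations_sec.values())
    return {rid for rid, secs in durations_sec.items() if secs < cutoff}


def is_straight_liner(grid_answers: list[int], threshold: float = 0.9) -> bool:
    """True if the most common answer in a rating grid covers >= `threshold` of the items."""
    if len(grid_answers) < 3:  # too few items to call it a pattern
        return False
    top_count = Counter(grid_answers).most_common(1)[0][1]
    return top_count / len(grid_answers) >= threshold


durations = {"r1": 600, "r2": 540, "r3": 120, "r4": 660}
print(flag_speeders(durations))                       # {'r3'}
print(is_straight_liner([4] * 10))                    # True
print(is_straight_liner([4, 3, 5, 4, 2, 4, 3, 5, 4, 1]))  # False
```

Both checks are cheap, which is exactly why they are not enough on their own: a bot that mimics human behavior can pace itself and vary its answers.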

Why AI-Driven Bots Are a Growing Problem

In the past, low-quality responses like “asdfg” or “yuio” in open-ended questions made identifying fraudulent participants straightforward. But now, bots have become smarter, producing responses that appear nuanced and intentional. This evolution in technology has raised the stakes for researchers seeking reliable data.

Advanced bot-detection strategies are no longer optional; they’re a necessity.
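The older, easy cases really were easy. As a hypothetical example, the function below flags an open-ended answer as keyboard mashing when it is a single word in which every pair of neighbouring letters sits side by side on the same row of a QWERTY keyboard. That rule catches strings like “asdfg” and “yuio”. It assumes a QWERTY layout, and it is deliberately crude: a fluent, AI-generated answer passes straight through it.

```python
# Rows of a QWERTY keyboard (an assumption; other layouts would need their own rows).
KEYBOARD_ROWS = ("qwertyuiop", "asdfghjkl", "zxcvbnm")


def _adjacent_on_row(a: str, b: str) -> bool:
    """True if keys a and b sit next to each other on the same keyboard row."""
    for row in KEYBOARD_ROWS:
        i, j = row.find(a), row.find(b)
        if i != -1 and j != -1 and abs(i - j) == 1:
            return True
    return False


def looks_like_keyboard_mash(text: str, min_len: int = 4) -> bool:
    """Flag single-word answers typed by sliding along one keyboard row."""
    s = text.strip().lower()
    if len(s) < min_len or not s.isalpha():  # spaces or punctuation mean it isn't one run
        return False
    return all(_adjacent_on_row(a, b) for a, b in zip(s, s[1:]))


for answer in ["asdfg", "yuio", "Great value for the price"]:
    print(answer, looks_like_keyboard_mash(answer))
```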

Key Questions to Ask Your Sample Provider

When vetting a potential sample provider, always ensure they are prepared to combat AI-driven bots. Here are the essential questions you should ask:

- What tools are you using to detect bots? Providers should have advanced methods in place to detect sophisticated bot behavior.

- How are you adapting as bots evolve? Detection tools must evolve alongside the ever-changing capabilities of AI-driven bots.

A dynamic, proactive strategy, rather than a static one, is critical to maintaining data quality.

How ARG Battles Bots: A Multi-Dimensional Approach

At Angus Reid Group (ARG), we use a comprehensive and constantly evolving toolkit to protect data quality. Our strategies include:

- Advanced Pattern Detection Algorithms: These identify subtle irregularities in response behavior.

- Knowledge Traps: Designed to catch bots, these are questions bots cannot answer correctly but real people can.

- Hidden Questions: These are invisible to human respondents but detectable by bots, helping us identify bot behavior.

- Timestamp Analysis: Tracks how quickly respondents complete the survey to flag speeders.

- AI-Driven Open-Ended Analysis: Looks for duplicate responses and cross-references with web sources for authenticity.

- Duplicate IP Detection: Flags multiple responses from the same IP address.

- Continuous Innovation: Our approaches evolve to stay ahead of emerging bot tactics.
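Several of the checks listed above can be expressed as simple per-respondent flags. The sketch below is a hypothetical illustration, not ARG's proprietary implementation. The `Response` fields, the option code used as the knowledge-trap answer, and the exact-match rule for duplicate open-ends are all assumptions. It flags a failed knowledge trap, any answer to a hidden question (which a human never sees, so it should stay blank), duplicate IP addresses, and open-ended answers repeated word for word across respondents. Pattern-detection algorithms and cross-referencing open-ends against web sources are out of scope here.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Response:
    """One respondent's answers (hypothetical fields for illustration)."""
    respondent_id: str
    ip: str
    trap_answer: str    # option chosen on the knowledge-trap question
    hidden_answer: str  # answer to a question hidden from humans; should be blank
    open_end: str       # free-text answer


def _norm(text: str) -> str:
    """Lower-case and collapse whitespace so trivial differences don't hide duplicates."""
    return " ".join(text.lower().split())


def flag_responses(responses: list[Response], trap_correct: str) -> dict[str, set[str]]:
    ip_counts = Counter(r.ip for r in responses)
    open_counts = Counter(_norm(r.open_end) for r in responses if r.open_end.strip())
    flags: dict[str, set[str]] = {r.respondent_id: set() for r in responses}
    for r in responses:
        f = flags[r.respondent_id]
        if _norm(r.trap_answer) != _norm(trap_correct):
            f.add("failed_knowledge_trap")
        if r.hidden_answer.strip():
            f.add("answered_hidden_question")
        if ip_counts[r.ip] > 1:
            f.add("duplicate_ip")
        key = _norm(r.open_end)
        if key and open_counts[key] > 1:
            f.add("duplicate_open_end")
    return flags


sample = [
    Response("r1", "203.0.113.5", "B", "", "I mostly shop online for groceries."),
    Response("r2", "203.0.113.5", "B", "yes", "I mostly shop  online for groceries."),
    Response("r3", "198.51.100.7", "D", "", "Price matters most to me."),
]
for rid, f in flag_responses(sample, trap_correct="B").items():
    print(rid, sorted(f))
```

A shared IP address is a weak signal on its own, since households and mobile carriers often share addresses, which is one reason to combine flags rather than act on any single one.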

By combining these tools, ARG takes a multi-dimensional approach to determine which responses are trustworthy and which need to be removed.
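One way to picture a multi-dimensional decision is a weighted score over individual flags. In the minimal sketch below, the flag names, weights, and thresholds are hypothetical values chosen for illustration, not ARG's. Strong signals such as answering a hidden question can remove a response on their own, while weaker ones such as a shared IP only send it for review unless they pile up.

```python
# Hypothetical weights: higher means stronger evidence of a bot or bad actor.
FLAG_WEIGHTS = {
    "answered_hidden_question": 3.0,
    "failed_knowledge_trap": 2.0,
    "duplicate_open_end": 1.5,
    "speeder": 1.0,
    "straight_liner": 1.0,
    "duplicate_ip": 0.5,
}


def classify(flags: set[str], remove_at: float = 3.0, review_at: float = 1.0) -> str:
    """Return 'remove', 'review', or 'keep' from the summed weight of a respondent's flags."""
    score = sum(FLAG_WEIGHTS.get(flag, 1.0) for flag in flags)  # unknown flags count as 1.0
    if score >= remove_at:
        return "remove"
    if score >= review_at:
        return "review"
    return "keep"


print(classify(set()))                                                # keep
print(classify({"duplicate_ip"}))                                     # keep
print(classify({"answered_hidden_question"}))                         # remove
print(classify({"speeder", "straight_liner", "duplicate_ip"}))        # review
print(classify({"speeder", "straight_liner", "duplicate_open_end"}))  # remove
```

Summing weights lets several independent, individually weak signals add up to a removal decision. Any real set of weights would need tuning against responses whose status is already known.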

The Foundation of Research: Reproducibility

Reproducibility is the cornerstone of reliable insights. If the quality of your sample is compromised, the entire foundation of your research becomes shaky. To ensure robust data, you must verify the reliability of your sample source.

Three Essential Questions to Ensure Reliable Data

To protect the integrity of your research, ask these three critical questions of your sample provider:

- Where do your respondents come from? Understanding the recruitment process is crucial for ensuring you’re reaching the right audience.

- What evidence supports your data reliability? Providers should demonstrate consistent results across multiple surveys and samples.

- How do you detect and eliminate bots and other bad actors? Knowing their strategies for addressing fraudulent responses will give you confidence in their data quality.

With good answers to these questions, you can be confident that the answers to your own questions will be reliable too. Trust, but verify.

Questions? Contact ARG today!
